Bielefeld University, Germany (2008-2012)

Advisors: Stefan Kopp, Ipke Wachsmuth, Katharina Rohlfing, Frank Joublin

PhD Student: Maha Salem

First, I pursued a computational approach to understanding principles of motor control in communicative hand and arm gestures by modeling these on a robotic platform, namely the Honda humanoid robot ASIMO. The implemented framework enabled the robot to produce speech and accompanying gestures that were not limited to a predefined repertoire of motor actions but were generated flexibly at run-time. Drawing inspiration from neurobiology, the model proposed in my work comprised two features that improve the synchronization process on the given robotic platform: an internal forward model that provides a more accurate estimate of the gesture preparation time required by the robot prior to actual execution; and an on-line adjustment mechanism for cross-modal adaptation based on afferent sensory feedback from the robot. As a result, synchrony between robot gesture and speech can be ensured during the execution phase despite prediction errors made earlier, during the behavior planning and scheduling phase.
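
As a rough illustration only (this is not the implementation that ran on ASIMO), the interplay of the two mechanisms can be sketched in a few lines of Python. The linear timing model, its parameter values, all function names, and the choice to resolve timing errors by delaying speech are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class ForwardModel:
    """Hypothetical forward model: estimates how long the arm needs to
    reach the gesture start pose, as a linear function of distance."""
    fixed_latency_s: float = 0.2      # assumed constant motor latency
    seconds_per_metre: float = 1.5    # assumed travel cost per metre

    def predict_preparation_time(self, distance_m: float) -> float:
        return self.fixed_latency_s + self.seconds_per_metre * distance_m


def schedule_gesture_start(word_onset_s: float, distance_m: float,
                           model: ForwardModel) -> float:
    """Planning phase: start the gesture early enough that the predicted
    preparation ends when the affiliated word begins."""
    predicted = model.predict_preparation_time(distance_m)
    return max(0.0, word_onset_s - predicted)


def adjust_speech_onset(planned_word_onset_s: float, gesture_start_s: float,
                        observed_preparation_s: float) -> float:
    """Execution phase: if feedback shows that preparation took longer than
    predicted, hold the word back until the arm is actually ready."""
    arm_ready_s = gesture_start_s + observed_preparation_s
    return max(planned_word_onset_s, arm_ready_s)


model = ForwardModel()
start = schedule_gesture_start(word_onset_s=2.0, distance_m=0.4, model=model)
# The arm turns out to be slower than predicted, so speech is delayed.
late_onset = adjust_speech_onset(2.0, start, observed_preparation_s=1.0)
# The arm is faster than predicted, so the planned onset is kept.
kept_onset = adjust_speech_onset(2.0, start, observed_preparation_s=0.7)
```

In this sketch the forward model only has to be roughly right: any remaining prediction error is absorbed at execution time by the feedback-based adjustment.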

In addition to the technical achievements of expressive and flexible robot gesture generation, I exploited the developed framework in controlled HRI experiments. To gain a deeper understanding of how communicative robot gesture might impact and shape human perception and evaluation of human-robot interaction, I conducted two experimental studies comprising a total of 122 participants. The findings revealed that participants evaluated a robot more positively when non-verbal behaviors such as hand and arm gestures were displayed along with speech. Surprisingly, however, this effect was particularly pronounced when the robot’s gesturing behavior was partly incongruent with speech. Besides supporting the pursued approach of endowing social robots with communicative gesture, these findings contributed new insights into human perception and understanding of such non-verbal behaviors in artificial embodied agents.

Research Project: Cross-Cultural Differences in HRI

Carnegie Mellon University in Qatar (2013)

Advisor: Majd Sakr

Research Associate: Maha Salem

During a postdoctoral research stay at CMU in Qatar in 2013, I set out to examine cross-cultural differences in Human-Robot Interaction (HRI) between native speakers of Arabic and English. For this purpose, I designed and ran what was reportedly the largest HRI experiment conducted in the Middle East to date. Motivated by the fact that Middle Eastern attitudes towards and perceptions of robot assistants are still a barely researched topic, I conducted a controlled between-participants study in Qatar. To explore culture-specific determinants of robot acceptance and anthropomorphization (i.e. the attribution of human qualities to non-living objects), a total of 92 native speakers of either English or Arabic were invited to interact with the receptionist robot Hala.

Although further research will be required to validate such assumptions, the findings of this study complement the existing body of cross-cultural HRI research with a Middle Eastern perspective that will help to inform the design of robots intended for use in cross-cultural, multi-lingual settings.

Research Project: Trustworthy Robotic Assistants

University of Hertfordshire, UK (2013-2015)

Advisor: Kerstin Dautenhahn

Research Fellow: Maha Salem

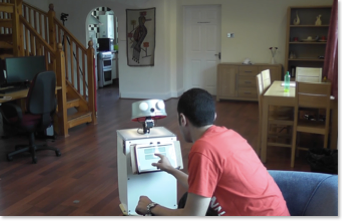

A wide range of robotic assistants are now being developed within academia and industry — from surgery and rehabilitation robots to flexible manufacturing robots — to help humans with everyday activities at work and at home. At the present time, the major challenge no longer lies in producing such robotic helpers, but rather in demonstrating that they are safe and trustworthy. As part of an EPSRC Fellowship, my research at the Adaptive Systems Research Group, University of Hertfordshire, aimed to specifically investigate the determinants that lead people to trust a robot and perceive it as safe.

As a specific focus of my research, I designed and conducted user studies to gain insights into the dynamics of human-robot trust in homecare scenarios. In this context, one important question was what kinds of errors, and how many, a robot can make without losing the user’s trust. In addition, I explored whether certain types of collaborative tasks require more trust than others and, if so, which factors may influence human judgement in critical HRI situations.

The findings of this research will bring us one step closer to the introduction of robotic helpers into the homes of those who may benefit most from their assistance.